Stop blindly trusting who's on the other side of the screen.

With GenAI, anyone can look and sound like you.

Bring zero-trust security to the human layer and stop deepfakes, imposter candidates, fraud, and insider threats before the damage is done.

The signals we use to trust identity are broken

Identity is still something we assume based on what we see and hear.

Impersonations now look and sound legitimate.

Security has not adapted: it does not verify the human on the screen or phone.

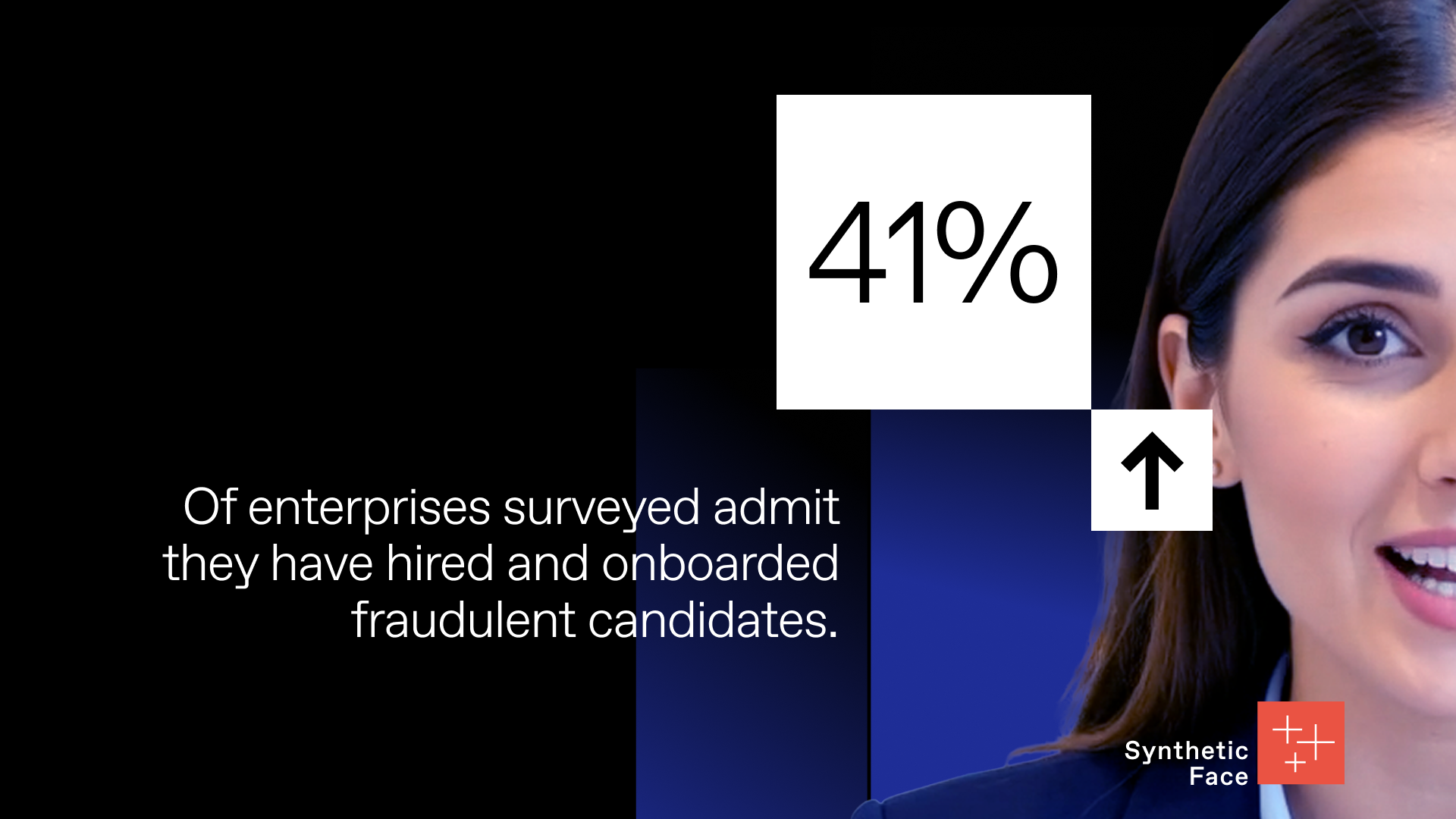

Identity attacks are already inside your enterprise

Source: Gartner

Source: GetReal Security Study

Source: Gartner

A Digital Trust and Authenticity Platform (DTAP)

GetReal is the only multimodal identity defense that verifies the authenticity of people in files and in real-time digital interactions. By combining content analysis, continuous authentication, and adaptive policy enforcement, GetReal protects trust where business actually happens.

Three identity attacks your enterprise must stop now

Fraudulent candidates are getting hired

Deepfake interviews, synthetic voices, and fabricated identities are slipping through enterprise hiring and onboarding workflows.

Prevent candidate fraud

Trusted employees are being impersonated

Attackers clone executives' faces and voices to socially engineer employees into approving payments, granting access, and bypassing controls.

Secure high-risk interactions

Account recovery is being exploited

Bad actors are hijacking employee and customer accounts during live helpdesk interactions and gaining access that appears fully legitimate.

Secure account recovery

Our DNA brings identity defense to the content layer

Content Credential Analysis

We scan the content to verify authenticity, origin, and edits by identifying embedded signatures, watermarks, or C2PA credentials.

Pixel Analysis

We examine the content for pixel-level signals, compression artifacts, and inconsistencies from editing software.

Physical Analysis

We analyze the image’s physical environment for inconsistencies with the real world.

Provenance Analysis

We examine the recorded journey and packaging of content for additional context.

Semantic Analysis

We analyze the content for contextual meaning and coherence.

Human Signals Analysis

We inspect content for faces and other human attributes to run more targeted analysis.

Biometric Analysis

We conduct identity-specific analysis through face and voice modelling.

Behavioral Analysis

We compare patterns in human behavior and interactions to detect inconsistencies.

Environmental Analysis

We assess physical surroundings for context and 3D authenticity.

Enterprise-grade detection and mitigation of malicious generative AI threats

Latest from GetReal Security

Video

December 2025

As recruiting moved online, a new class of adversary followed – using AI to fabricate identities, pass interviews, and infiltrate companies at scale. In some cases, these aren’t just scammers. They’re nation-state operatives. In this investigation, we look at how deepfake candidates are already moving through enterprise hiring pipelines – and why traditional checks no longer work.

00:07:57

Go to resources to see more news on what is happening at GetReal.